Development of Real-Time Cancer Diagnostics Equipment Using Auditory Feedback

Overview:

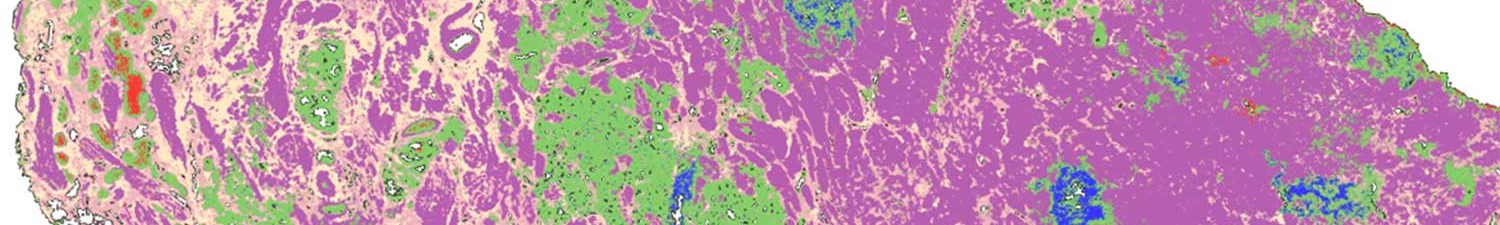

Diagnosing cancer at an early stage is vitally important: it can make treatment much simpler and can dramatically improve the patient's chance of survival. When diagnosing cancerous cells, clinicians are often required to classify samples manually using subjective techniques. This is very difficult to do visually, because there are very few observable differences between the data taken from cancerous and non-cancerous cells. Instead, we can extract the most statistically salient properties of the cell data and convert them to sound. This not only provides a more perceptually relevant representation of the data, it also frees up the clinician's visual sense, allowing them to control a probe more accurately and to inspect the sample visually for physical abnormalities.

To provide this form of auditory feedback, we need to overcome three key problems:

- The first problem is extracting statistically relevant features from the cell data. To overcome this, we need a very accurate representation of the two data classes (cancerous and non-cancerous).

- The second problem is how to convert the cell's statistically relevant features into perceptually relevant sound parameters. A number of synthesis techniques could be used for this, but ultimately we aim to provide the surgeon with a stimulating waveform that is not distracting and that clearly discriminates between the two types of cell.

- Finally, all of this must run in real time, allowing clinicians to perform in vivo diagnostic procedures. Current techniques for diagnosing cancerous cells rely on advanced machine-learning methods based on dimensionality reduction, which add substantial processing time before results are available. With current computing hardware, these techniques are too slow to indicate a cell's class quickly during the procedure.

Ultimately, a system based on auditory feedback should require minimal computationally expensive processing while delivering high classification accuracy and a perceptually relevant representation.

This project will be performed in collaboration with Dr Matthew J Baker, Bioanalytical Sciences Research Group, University of Central Lancashire.

For further information, please see below or contact Dr Ryan Stables (ryan.stables@bcu.ac.uk) or Professor Cham Athwal (cahm.athwal@bcu.ac.uk).

http://www.bcu.ac.uk/tee/research/phd-mphil/bursaries#dmtlab

Please apply via the link below:

http://www.jobs.ac.uk/job/AIM750/phd-studentships/